T Ashok @ash_thiru on Twitter

Summary

This article presents seven interesting thoughts, each outlined as a picture, on what it takes to do SmartQA. These are on SmartQA Thinking, Smart Understanding, Smart Design (in two parts), SmartQA Test Organisation, Smart Validation and Smart Plan.

SmartQA Thinking

Yesterday a good friend of mine told me about his recent conversation with a senior engineering manager in a product dev org. The Sr Engr Manager, a great believer in code coverage, told him that he focuses on covering close to 100% of the code as the only measure to ensure quality, and as a means to implement shift-left. Absolutely right, isn’t it? After all, ensuring that all code written is executed at least once & validated is logical and necessary.

What are the fallacies in this? (1) Well, it assumes that all the code needed has been written. (2) Well, non-functional aspects of code cannot be completely validated this way. (3) Well, it assumes that this is what users really wanted, even if the code is working flawlessly. (4) Well, the number of paths to cover at the highest level of user-oriented validation is just too many to cover, next to impossible! Code coverage is a necessary condition but simply not sufficient.
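The first fallacy, that all the code needed has been written, can be illustrated with a small sketch. The function `average` and its single test are hypothetical and not taken from the conversation above. The one test executes every line, so a coverage tool would report 100%, yet the handling of an empty list was never written and therefore cannot show up as uncovered.

```python
def average(values):
    """Return the arithmetic mean of a list of numbers (hypothetical example)."""
    total = 0
    for v in values:
        total += v
    # Missing code: nothing here handles an empty list.
    return total / len(values)


# This single check executes every line of average(): 100% line coverage.
assert average([2, 4, 6]) == 4

# Coverage cannot flag code that does not exist. The empty-list case
# still fails at runtime even though every written line was "covered".
try:
    average([])
    empty_list_handled = True
except ZeroDivisionError:
    empty_list_handled = False

print("empty list handled:", empty_list_handled)
```

The coverage number only speaks for the lines that exist; the missing behaviour has to be found by thinking about what is needed, not by measuring what was executed.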

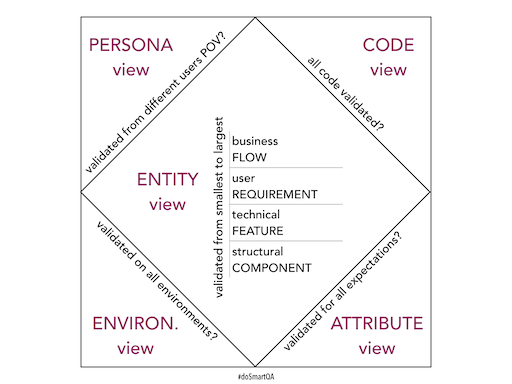

Doing SmartQA requires multidimensional thinking: looking at the system from various angles, both internal (code, architecture and technology) and external (behaviour, end users, environment & usage), and then making appropriate choices of what to validate later or earlier and what to prevent or detect statically.

Smart Understanding

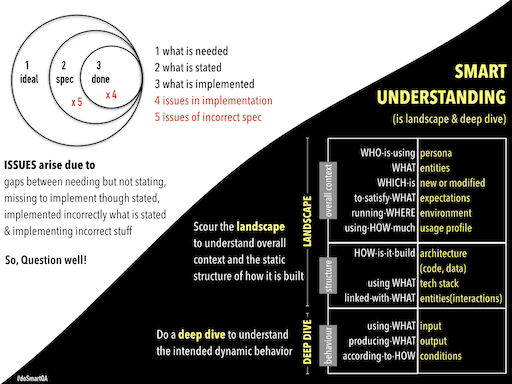

Prevention occurs due to good understanding. Detection occurs due to good understanding. Understanding of what is needed, what is stated and what is implemented.

Doing SmartQA is about great mental clarity in visualising what is intended, what is present, and what may be missing that could be added to enhance the experience. The intent to seek this clarity is what drives one to question well, build better, and prevent and detect issues.

The act of testing is really discovering what-should-be-there but is-not-there, what-is-there but is-not-correct, and what-is-there but should-not-be-there. Finally, it is about understanding the impact of something that has been changed, be it in the system or outside the system.

Smart Understanding is about scouring the ‘landscape’ to understand the overall context and the static structure of how the system is built, and then ‘deep-diving’ to understand the intended dynamic behaviour. Landscape and Deep dive are great mental tools to explore the system rapidly to do SmartQA. The associated picture illustrates these two thinking tools well.

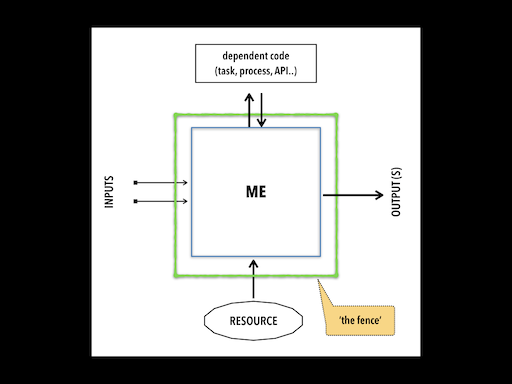

Smart Design I

Doing SmartQA is not just evaluation. It is about enabling the code being built to be robust: to resist errors creeping in, to code in firewalls, to ensure that I have all I need in good condition before I consume it. This implies that the data (inputs) I process are clean, the environment I operate in is clean, the resources I need are indeed available, and the dependencies I have on others are indeed working well.

All I do is protect myself. How can I cope when irritants are hurled at me? Well, I have three choices:

(a) reject them and not do what I am supposed to do, (b) flag them (log) and not do what I am supposed to do, or (c) intelligently scale down and do less.

The key focus is to be robust, to be unaffected by bad inputs, configuration/settings, resources or dependent code. The act of designing for robustness makes one sensitive to potential issues that may arise, and to ensuring we are not edged into a corner.
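The three choices (reject, flag, scale down) can be sketched in code. This is a minimal illustration under stated assumptions: the function `parse_quantity`, the exception `RejectedInput`, the policy names and the fallback value are all hypothetical, chosen only to show one guard behaving in the three ways described.

```python
import logging

logger = logging.getLogger("smartqa.sketch")  # hypothetical logger name


class RejectedInput(ValueError):
    """Raised when a bad input is rejected outright (choice a)."""


def parse_quantity(raw, *, policy="reject", default=1):
    """Turn raw input into a positive integer, guarding against 'irritants'.

    policy="reject":  raise and do nothing further            (choice a)
    policy="flag":    log the problem and return None         (choice b)
    policy="degrade": log and fall back to a safe default     (choice c)
    """
    try:
        qty = int(raw)
        if qty <= 0:
            raise ValueError("quantity must be positive")
        return qty
    except (TypeError, ValueError) as exc:
        if policy == "reject":
            raise RejectedInput(f"bad quantity: {raw!r}") from exc
        if policy == "flag":
            logger.warning("ignoring bad quantity %r: %s", raw, exc)
            return None
        if policy == "degrade":
            logger.warning("bad quantity %r, using default %d", raw, default)
            return default
        raise ValueError(f"unknown policy: {policy!r}")
```

Which policy fits depends on what the caller can tolerate: rejecting is safest when a wrong answer is costly, while degrading keeps the system useful when a partial result is acceptable.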

Smart Design II

Let us talk a bit about test design now. We focus a lot on execution, and therefore on the ability to cover more. The focus has veered to how frequently we are able to execute the tests, and therefore to automation. Let’s step back and ask what the objective was: it was primarily to deliver clean code. That calls for a deeper sensitivity to the quality of tests. This is where design becomes important.

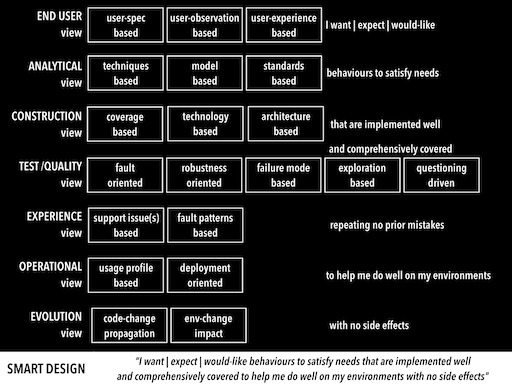

So what is a smart way to design, to come up with good scenarios, ideally a few that can uncover the issues that matter most? Smart Design is about looking at the system from multiple views to ensure:

“I want | expect | would-like behaviours to satisfy needs that are implemented well and comprehensively covered to help me do well on my environments with no side effects”.

Then decide what you want to prevent, statically detect or test, be it via a human or a machine. Focus on the intent first and then on the activity.

SmartQA Test Organisation

Once upon a time software engineers developed code and also tested it. Then dedicated QA teams became the norm of the day and testing was ‘owned’ by these teams. In current times, with rapid dev driven by Agile, it is kinda merging back into dev, with dedicated QA becoming thinner. A recent article in SD Times talked about “who owns QA” – is it the dev org or a dedicated org? So what is the right fit?

What ‘dedicated QA’ really means is that the focus on QA/testing is indeed there. In a software org we need specialists, be it in analysis, architecture, development, support, and testing too. Dedicated QA does not mean reporting only into a dedicated QA leadership position; it just means that we have QA specialists who have deep knowledge of systems validation.

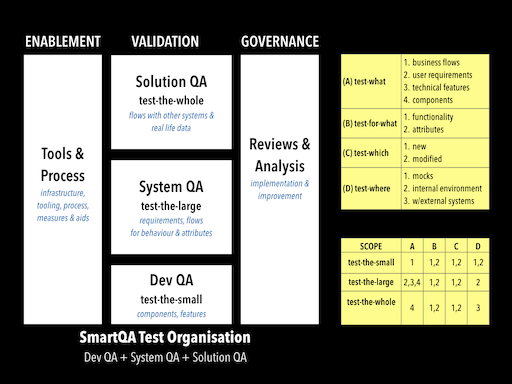

So what may be the right fit for building a SmartQA organisation? Think of QA as a mixture of Dev QA, System QA and Solution QA, each with a different validation objective.

In addition to validation, a dedicated QA (System/Solution QA) is well suited to provide enablement & governance. This implies setting up test infrastructure, tooling frameworks, process and metrics, publishing aids, doing reviews and improving the system.

Smart Validation

The approach to validation of software has typically been ‘manual’ or ‘automated’. Nah, those are really not the right phrases; it is really ‘human-powered’ and ‘machine-assisted’. So when we decide to validate in a non-automated manner, what could be smart ways of ‘doing’?

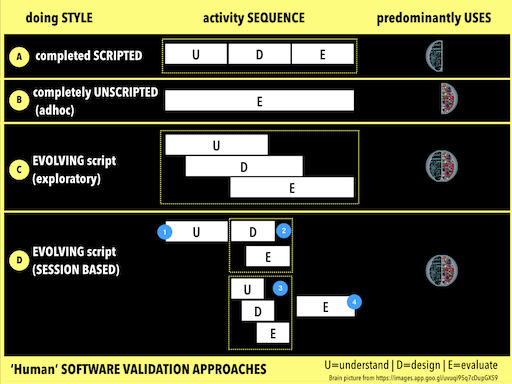

Well, there are FOUR approaches:

A: Completely scripted, where we understand completely, then design and then evaluate. This is the typical way we work when specifications are reasonably clear and complete, mostly employing the logical left brain to the hilt.

B: Completely unscripted, the typical approach to testing when faced with a severe time crunch, or when done by a casual person, wherein one jumps into evaluation largely guided by one’s creativity, luck and experience.

C: Evolving script, an exploratory approach where understanding, design and execution happen concurrently using a good mix of left and right brain.

D: Evolving script done in short sessions, where we set up a clear objective of what we want to accomplish and then perform ‘only understanding’, or ‘understand & design’, or ‘understand, design & evaluate’, or largely only ‘evaluate’, using a good mix of left and right brain.

What approach you choose depends upon the context. You may veer towards A when specs seem clear, and you may end up using C or D when the system is evolving or when specs are at a higher level. B is useful to complement A, C and D as quick checks, or to exploit the power of random.

Oh, “Immersive session testing” builds upon this and attempts to enable one to ‘immerse’ in the act of evaluation, to get into a state of ‘flow’. It is a session-based approach that has a suite of ‘thinking tools’ to understand, design and evaluate, with the prime objective of ‘doing less and accomplishing more’.

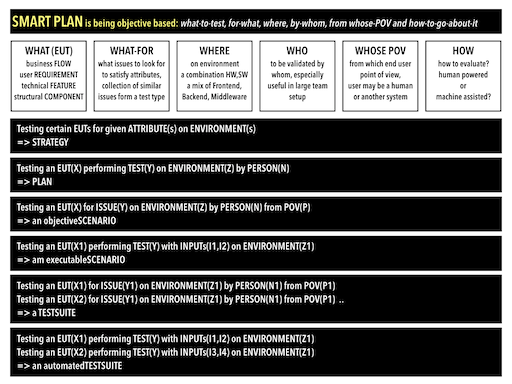

Smart Plan

A smart plan is simple & objective-based – what entity to test, what issue to test for (by conducting what test), what environment to test in, who tests (in the case of a team), whose point of view to test from, and finally how to test (human or automated). A concise plan that is a collection of these enables sharp focus and rapid evaluation, be it human-powered or by building nimble automated suite(s).

A higher-level view of what kinds of issues are to be uncovered at different levels of entities on specific environment(s) would be a strategy, whilst specific issues on specific entities would form a plan. This form of thinking facilitates the design of two kinds of scenarios: (1) an ‘objectiveScenario’ from an ‘output focus’, a simple one-liner that outlines what issues to uncover for what entity on which environment, and (2) an ‘executableScenario’ from an ‘input focus’, a set of inputs to stimulate an entity with, to uncover specific issues in a given environment.
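The elements of such a plan can be sketched as a small data structure. This is only an illustration: the class names `PlanItem` and `ExecutableScenario`, the field names and the sample values are assumptions made for this sketch, not a format prescribed by the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlanItem:
    """One line of a plan: what to test, for what, where, by whom and how."""
    entity: str        # what entity to test
    issue: str         # what issue to test for
    test: str          # the test conducted to uncover that issue
    environment: str   # the environment to test in
    tester: str        # who tests (in the case of a team)
    viewpoint: str     # whose point of view to test from
    mode: str          # "human" or "automated"

    def objective_scenario(self) -> str:
        """Output focus: a one-liner stating what to uncover, for what, where."""
        return f"Uncover '{self.issue}' in '{self.entity}' on '{self.environment}'"


@dataclass(frozen=True)
class ExecutableScenario:
    """Input focus: the inputs used to stimulate the entity for a plan item."""
    plan_item: PlanItem
    inputs: tuple


# Hypothetical sample values, for illustration only.
item = PlanItem(
    entity="login API",
    issue="weak input validation",
    test="boundary value test",
    environment="staging",
    tester="dev QA",
    viewpoint="end user",
    mode="automated",
)
scenario = ExecutableScenario(plan_item=item, inputs=("", "a" * 256, "user@example.com"))

print(item.objective_scenario())
print(len(scenario.inputs), "inputs to stimulate", scenario.plan_item.entity)
```

Keeping the objective one-liner separate from the inputs mirrors the split in the text: the plan item says what you are after, and the executable scenario says how you will poke at the entity to find it.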