T Ashok @ash_thiru

Summary

This article curates ideas from four articles: the first suggests ten ways to build quality into software; the second, from the Scaled Agile Framework, outlines a scalable Definition of Done; the third shows how lean thinking and management help; and the last explains how Poka-Yoke helps with mistake-proofing.

Building Quality In

“When looking at quality from a testing perspective, I would agree that it is not possible to build software quality in. To build quality in you need to look at the bigger picture. There are many ways to improve quality. It all depends on the problem. Maybe, you can automate something that previously had to be done by a human being. Maybe, you need training to better use the tools you have. Maybe you need to find a better tool to do the job. Or maybe, you need a checklist to remind you of what you need to look at. The possibilities are endless.

That’s not what I’m talking about when I talk about building quality in. Building in quality requires a more general, big-picture approach,” says Karin Dames in the insightful article “10 Ways to Build Quality Into Software – Exploring the possibilities of high-quality software”, which outlines ten guidelines to consistently build quality into software:

1. Slow down to speed up

You either do it fast, or thoroughly.

2. Keep the user in mind at all times

The story isn’t done until the right user can use it.

3. Focus on the integration points

Integration is probably the biggest cause of coding errors, understandably.

4. Make it visible

Spend time adding valuable logging, with switches to turn logging on and off on demand.
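As a minimal sketch of such a switch, the snippet below uses Python's standard `logging` module. The logger name `orders`, the helper `set_verbose`, and the function `process_order` are hypothetical names chosen for illustration; they do not come from the original article.

```python
import logging

# Hypothetical component logger; the name "orders" is an assumption.
logger = logging.getLogger("orders")
logger.addHandler(logging.NullHandler())  # the application decides where logs go


def set_verbose(enabled: bool) -> None:
    """Switch detailed logging on or off at runtime."""
    logger.setLevel(logging.DEBUG if enabled else logging.WARNING)


def process_order(order_id: int, quantity: int) -> int:
    """Hypothetical business function that logs its key decisions."""
    logger.debug("processing order %s with quantity %s", order_id, quantity)
    if quantity <= 0:
        logger.warning("order %s rejected: quantity must be positive", order_id)
        return 0
    return quantity


set_verbose(True)   # detailed messages are emitted while investigating
process_order(1, 3)
set_verbose(False)  # back to warnings and errors only
```

The same switch could be driven by an environment variable or a configuration flag, so that logging can be turned up without changing the code.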

5. Error handling for humans

What would the next person need to understand this without having to bug me?
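One possible way to act on this question is to make every error say what failed, the likely cause, and a next step. The sketch below is an illustration only: the `ConfigError` class, the `read_port` function, and the `port` setting are hypothetical and not taken from the original article.

```python
class ConfigError(Exception):
    """Error that states what failed, the likely cause, and a next step."""

    def __init__(self, what: str, why: str, fix: str) -> None:
        self.what, self.why, self.fix = what, why, fix
        super().__init__(f"{what}. Likely cause: {why}. Suggested fix: {fix}.")


def read_port(settings: dict) -> int:
    """Read a port number from a hypothetical settings dictionary."""
    raw = settings.get("port")
    if raw is None:
        raise ConfigError(
            "Setting 'port' is missing",
            "the settings were created without a port entry",
            "add an entry such as port = 8080",
        )
    try:
        return int(raw)
    except ValueError as exc:
        # Keep the original exception attached for whoever debugs this later.
        raise ConfigError(
            f"Setting 'port' has the value {raw!r}, which is not a number",
            "a typo in the settings",
            "use digits only, for example 8080",
        ) from exc
```

A reader who hits this error can act on the message directly, without tracking down the person who wrote the code.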

6. Stop and fix errors when they’re found

Done means done. End of story. Don’t accept commonly accepted levels of errors.

7. Prevent it from occurring again

Do a root cause analysis (RCA) to uncover what caused the problem in the first place, and put a measure in place to prevent it from happening again.

8. Reduce the noise

Good design is simple. Good design is also good quality.

9. Reduce. Re-use. Recycle.

Focus on maintainability. A code base is organic. Factor in time for rewriting code and cleaning up code, just like you would spring clean your house regularly or clean up your desk.

10. Don’t rely on someone else to discover errors

Just because it’s not your job, doesn’t mean you shouldn’t be responsible. If you see something wrong, do something about it. If you can fix it, do it. Immediately.

Read the full article at “10 Ways to Build Quality Into Software – Exploring the possibilities of high-quality software”.

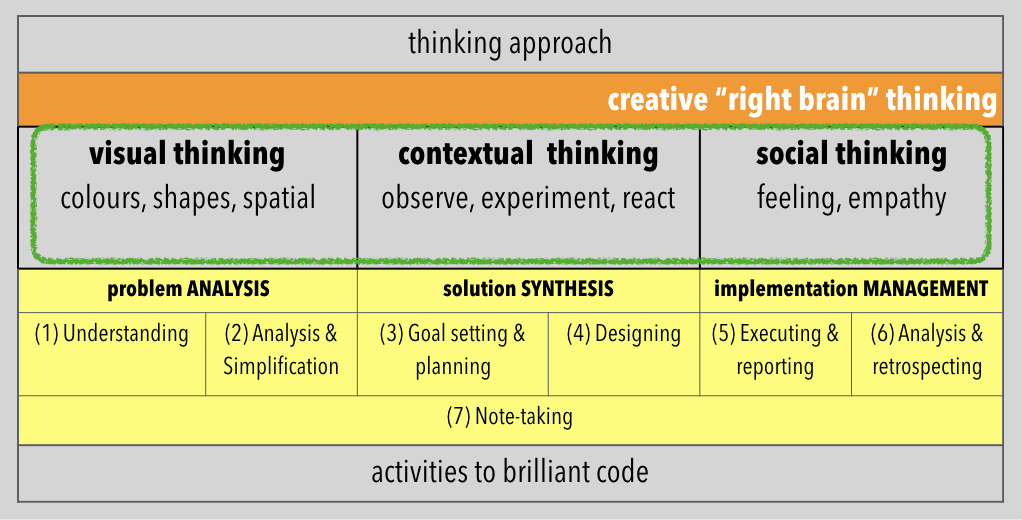

Reactive vs Proactive Quality Management

“To understand how to build quality into our products from the very beginning, we need to understand why this is not happening naturally. The most common way of preventing defects from reaching customers comes down to introducing a great number of inspections and countless KPI or metrics into the process. The problem with this approach is that it is reactive. And wasteful. If we think in the context of the value streams, neither inspections nor metrics add any value to the customer. At best, they help you discover and react to already produced defects. At worst, they encourage playing the system – you get what you measure,” says the insightful article “The Built-In Quality Management of Continuous Improvement”.

The article goes on to outline how lean management approaches quality and defects, and what a shift to proactive quality management involves.

Shifting into proactive quality management

Lean management views the issue of quality and defects through the lens of value and continuous improvement, which translates into the following principles:

- Value-centered mindset means everything you do needs to be bringing value to your client. Your client is anyone who receives the deliverable of your work.

- Waste-conscious thinking helps remove whatever is not adding or supporting value. This results in fewer redundant metrics or steps in a process.

- Continuous flow of work encourages working in smaller batches. This reduces the risk of larger defects, makes fixes easier and establishes a smooth delivery flow.

- Bottlenecks are removed or guarded for the sake of the flow. If a work stage adds a lot of value but takes too much time, the cost of delay for the rest of the process might outweigh this value.

- Pull-powered flow means efforts and resources should not get invested into the things irrelevant to your stakeholders.

- Upstream leadership empowers the person who is doing the work to raise issues, letting you cut them off at the root.

- Analysis and continuous improvement. Applying the Lean principles once won’t do the trick. Continuously analyze your work, outcomes, mistakes and build on that.

To know more, read the full article “The Built-In Quality Management of Continuous Improvement”.

Scalable Definition of Done

The interesting article “Built-In Quality” states: “Definition of Done is an important way of ensuring increment of value can be considered complete. The continuous development of incremental system functionality requires a scaled definition of done to ensure the right work is done at the right time, some early and some only for release. An example is shown in the picture below, but each team, train, and enterprise should build their own definition.”

Copyright Scaled Agile Inc. Read the FAQs on how to use SAFe content and trademarks here: https://www.scaledagile.com/about/about-us/permissions-faq/. Explore Training at: https://www.scaledagile.com/training/calendar/

Read the full article “Built-In Quality”.

On a closing note: have you heard of Poka Yoke? Poka Yoke means ‘mistake-proofing’ or, more literally, avoiding (yokeru) inadvertent errors (poka). Its idea of preventing errors and defects from appearing in the first place is universally applicable and has proven to be a true efficiency booster.

Poka Yokes ensure that the right conditions exist before a process step is executed, thus preventing defects from occurring in the first place. Where this is not possible, Poka Yokes perform a detective function, eliminating defects in the process as early as possible.

Poka Yoke is any mechanism in a Lean manufacturing process that helps to avoid mistakes. Its purpose is to eliminate product defects by preventing, correcting, or drawing attention to human errors as they occur.

One of the most common examples is that the driver of a car with a manual gearbox must press the clutch pedal (a process step that acts as the Poka Yoke) before starting the engine. The interlock prevents unintended movement of the car.
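The clutch interlock can be mimicked in software as a precondition check that refuses to run a step until the right conditions exist. The following is a minimal sketch under that assumption; the `Car` class and `InterlockError` are hypothetical names used only to illustrate the idea.

```python
class InterlockError(Exception):
    """Raised when a step is attempted before its precondition is met."""


class Car:
    """Toy model of a car with a manual gearbox and a clutch interlock."""

    def __init__(self) -> None:
        self.clutch_pressed = False
        self.engine_running = False

    def press_clutch(self) -> None:
        self.clutch_pressed = True

    def release_clutch(self) -> None:
        self.clutch_pressed = False

    def start_engine(self) -> None:
        # Poka Yoke: the mistake is made impossible rather than detected later.
        if not self.clutch_pressed:
            raise InterlockError("Press the clutch pedal before starting the engine.")
        self.engine_running = True


car = Car()
car.press_clutch()
car.start_engine()  # succeeds because the precondition holds
```

Calling `start_engine()` on a new `Car` without pressing the clutch raises `InterlockError`, so the unsafe sequence cannot complete silently.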

When and how to use it?

The Poka Yoke technique can be used wherever a mistake could occur or something could be done wrong. It helps prevent all kinds of errors:

- Processing error: Process operation missed or not performed per the standard operating procedure.

- Setup error: Using the wrong tooling or setting machine adjustments incorrectly.

- Missing part: Not all parts included in the assembly, welding, or other processes.

- Improper part/item: Wrong part used in the process.

- Operations error: Carrying out an operation incorrectly; having the incorrect version of the specification.

- Measurement error: Errors in machine adjustment, test measurement or dimensions of a part coming in from a supplier.

If you are keen to know more, read the full article “What is the Poka Yoke Technique?”.

—

References

1. Karin Dames, “10 Ways to Build Quality Into Software – Exploring the possibilities of high-quality software”.

2. From Scaled Agile Framework Inc, “Built-In Quality”.

3. From kanbanize.com, “The Built-In Quality Management of Continuous Improvement”.

4. From kanbanize.com, “What is the Poka Yoke Technique?”.